Tensorboard Integration¶

Basic Usage¶

To use Tensorboard with stable baselines3, you simply need to pass the location of the log folder to the RL agent:

from stable_baselines3 import A2C

model = A2C('MlpPolicy', 'CartPole-v1', verbose=1, tensorboard_log="./a2c_cartpole_tensorboard/")

model.learn(total_timesteps=10000)

You can also define custom logging name when training (by default it is the algorithm name)

from stable_baselines3 import A2C

model = A2C('MlpPolicy', 'CartPole-v1', verbose=1, tensorboard_log="./a2c_cartpole_tensorboard/")

model.learn(total_timesteps=10000, tb_log_name="first_run")

# Pass reset_num_timesteps=False to continue the training curve in tensorboard

# By default, it will create a new curve

model.learn(total_timesteps=10000, tb_log_name="second_run", reset_num_timesteps=False)

model.learn(total_timesteps=10000, tb_log_name="third_run", reset_num_timesteps=False)

Once the learn function is called, you can monitor the RL agent during or after the training, with the following bash command:

tensorboard --logdir ./a2c_cartpole_tensorboard/

you can also add past logging folders:

tensorboard --logdir ./a2c_cartpole_tensorboard/;./ppo2_cartpole_tensorboard/

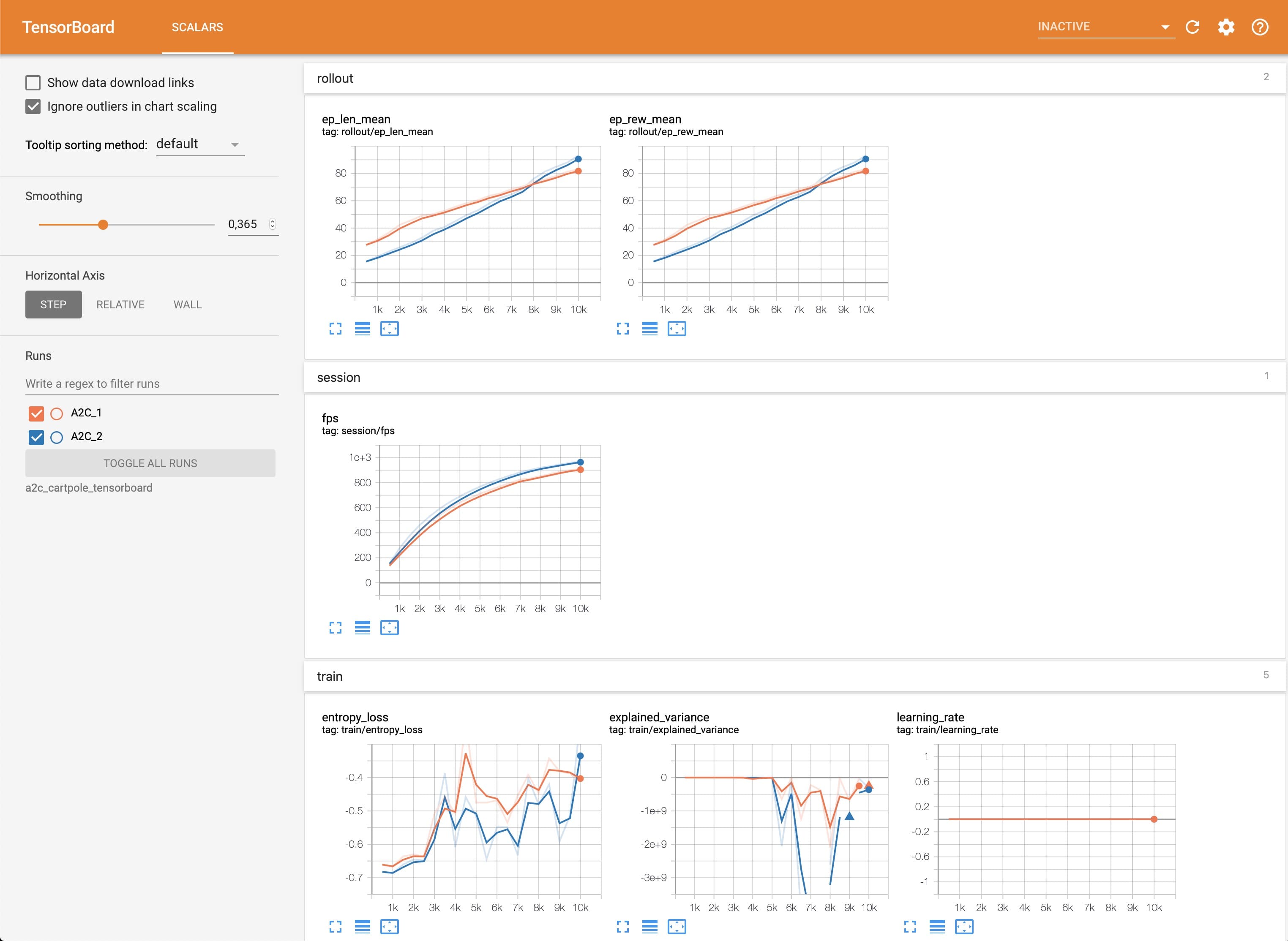

It will display information such as the episode reward (when using a Monitor wrapper), the model losses and other parameter unique to some models.

Logging More Values¶

Using a callback, you can easily log more values with TensorBoard. Here is a simple example on how to log both additional tensor or arbitrary scalar value:

import numpy as np

from stable_baselines3 import SAC

from stable_baselines3.common.callbacks import BaseCallback

model = SAC("MlpPolicy", "Pendulum-v0", tensorboard_log="/tmp/sac/", verbose=1)

class TensorboardCallback(BaseCallback):

"""

Custom callback for plotting additional values in tensorboard.

"""

def __init__(self, verbose=0):

super(TensorboardCallback, self).__init__(verbose)

def _on_step(self) -> bool:

# Log scalar value (here a random variable)

value = np.random.random()

self.logger.record('random_value', value)

return True

model.learn(50000, callback=TensorboardCallback())